I got a message on LinkedIn a little while ago from a reader (Hi Marcelo!) asking about my thinking on Contract Testing. I wrote up a brief answer, but there are definitely some more thoughts bouncing around in my head... so it's ramblin' time!

For those unaware, "Contract Testing" is writing tests against an external system to detect if the 'contract' changes. The "contract" in this case is the data structure it accepts and/or returns.
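To make that definition concrete, here's a minimal sketch of what "checking the contract" can look like. The field names in `EXPECTED` and the helper `contract_violations` are made up for illustration; they don't come from any real API, and a real check would call the external system instead of using a hand-written payload.

```python
# Hypothetical contract: the top-level fields we rely on and their types.
EXPECTED = {"id": int, "name": str, "tags": list}


def contract_violations(payload: dict, expected: dict) -> list:
    """Return a list of human-readable differences between payload and contract."""
    problems = []
    for field, expected_type in expected.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            actual = type(payload[field]).__name__
            problems.append(
                f"{field}: expected {expected_type.__name__}, got {actual}"
            )
    return problems


# A payload that matches the contract produces no violations.
print(contract_violations({"id": 1, "name": "a", "tags": []}, EXPECTED))

# A payload that drifted: 'id' became a string and 'tags' disappeared.
print(contract_violations({"id": "1", "name": "a"}, EXPECTED))
```

It only looks at top-level fields and only at types, which is about as simple as a contract check gets.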

While I'm fine with "contract testing" applying to a library being included, for this post I'll confine it to REST (like) calls.

Q: Do you (me, the author, this guy) do Contract Testing?

A: ... It depends.

The answer is (almost) always - "It depends" (almost, because it depends).

To be a little more honest about it - No; I don't do Contract Testing. ... Except when I do.

For most of my career I've interacted with endpoints I controlled, so... no; I'm not writing "Contract Tests"... I write Unit Tests... integration tests? end-to-end tests? Whatever; I get a payload and I verify my system returned the right thing. Because that's the code I control. It's also not across the network.

And if it isn't endpoints I control, it's endpoints controlled by a team in the same company. If things break, I'm close enough to literally wring necks.

My favorite has been when I wrote the APIs that got called, and then switched to a team developing the product that consumed the endpoints.

When neither of those is true... it's a 3rd party owned and controlled API. I still don't... until I've lost trust in the API, or I don't know enough to trust the API.

If it's not a well documented API I'll write some tests around the API to provide the reference material. It's not quite "Contract Testing"; it's providing documentation on what the contract is in the simplest way we can. It does look at the contract and validate what it is.

I don't know enough to trust the API. I don't know enough of what the API is doing to trust it.

I'll generally only write enough of these tests to provide enough examples of how I'll be using it to serve as reference material to write code consuming the APIs. More tests get added as I need to understand more parts of the system.

The main time I'll do Contract Testing is when the payloads stop making sense. It might be due to poor documentation; but it's more because I can't trust the API.

For me, normally, a thought-out API behaves consistently. Once it clicks for me how they think about their API, I can understand the decisions they'll make and predict how the 'contract' will look. It makes sense.

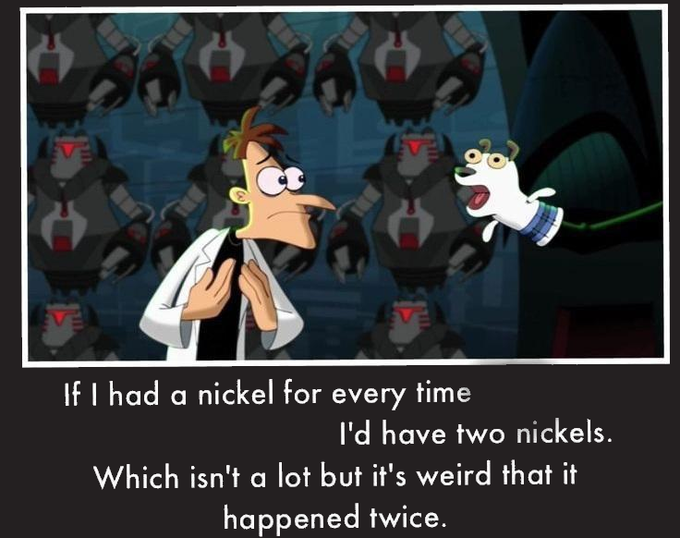

I've had a few that decided making sense wasn't worth the effort and did... I don't know what.

Before I get into an API I had to write Contract Tests around to understand WTF... let's recap:

* Good designs with excellent documentation are great. Probably never going to write Contract Tests. Think Stripe API.

* Bad designs with excellent documentation... I'll be angry every time I have to do something with it... but no need for Contract Tests.

* Good designs with poor documentation ... This is where I'll use reference Contract Tests.

* Bad (consistent) design with poor documentation ... still just reference tests. Consistency is comprehensible. It just takes some time to understand the bad thinking behind the design. I'll do this with code that hurts me... once I understand how they were thinking, the system is easy to navigate.

Finally - Inconsistent APIs, even with good documentation, are going to show that they NEED Contract Tests around them.

The Example

I don't remember the 3rd party API we were using, or I would 100% name them. We had to make a call and would get back a collection of objects. Perfect. Used the pretty solid documentation to consume other parts of their API so... kept going with this one.

And then the errors started. In prod. I love being on call. I didn't fully trust the API. There were some sketchy feelings about it. Things that just ... didn't quite make sense. Designs felt a little off. But it was consistent with the documentation, so... OK. Good (enough) documentation.

I had logging in the system so I could reconstruct what we'd be sending. It took some hunting to get the pieces put together and I started to write tests against the API.

Does this even count as "Contract Testing"? I wasn't testing the contract... I was testing their idiocy.

In the payload there was a collection of objects we would get back.

    {
      "theCollection": [
        { Object Type }
      ]
    }

Something simple like that. Our test environment never had issues. Always got the results we wanted.

I don't recall the whole process, it was a couple years ago... But there were some conditions we couldn't repro in lower environments. It was a little too curated. So I wrote tests to FORCE calls for things that didn't exist. Or to be overly filtered and get no results back.

I was able to generate the scenario where we'd get back a DIFFERENT OBJECT in theCollection field. The one that we expect an array in. Wow. That... wow. I was baffled. I SCOURED the documentation looking for where such madness existed.

If I was GENEROUS and took the error handling for API-A and assumed it also applied for API-B; but with a different field name... then... sure... yeah... the same handling worked.

I had many painful sighs... but was covered in tests showing the idiocy of the design.

And prod broke again.

FUUUUUUUU..... Fine. Dug into it deeper and found a different scenario could happen.

When this happened, theCollection was a string. ...

Yeahhhhh.... I had a lot of conditionals around the specific endpoint. I had a lot of tests around that call. Bad data in every way I could imagine. Didn't discover any more... but I covered it in tests.

I think this is the closest I've come to writing Contract Tests. It wasn't there to validate the contract; but to demonstrate the contract.
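Here's roughly what "demonstrating the contract" with tests can look like. This is a sketch, not the tests I actually wrote: the recorded bodies in `RECORDED` are invented (I don't remember the real payloads), `shape_of` is a hypothetical helper, and real versions would hit the 3rd party API instead of reading canned strings.

```python
import json

# Hypothetical recordings standing in for real responses from the API.
RECORDED = {
    "results exist": '{"theCollection": [{"id": 1}]}',
    "forced call for something that doesn't exist": '{"theCollection": {"error": "not found"}}',
    "the second prod failure": '{"theCollection": "no results"}',
}


def shape_of(value) -> str:
    """Name the JSON shape of a decoded value."""
    if value is None:
        return "null"
    if isinstance(value, list):
        return "array"
    if isinstance(value, dict):
        return "object"
    if isinstance(value, str):
        return "string"
    return type(value).__name__


def collection_shape(scenario: str) -> str:
    """Decode a recorded response and report the shape of theCollection."""
    return shape_of(json.loads(RECORDED[scenario])["theCollection"])


# Each test name is the documentation: it says what the API does, not what it should do.
def test_collection_is_an_array_when_results_exist():
    assert collection_shape("results exist") == "array"


def test_collection_is_an_object_when_the_thing_does_not_exist():
    assert collection_shape("forced call for something that doesn't exist") == "object"


def test_collection_is_a_string_in_the_second_prod_failure():
    assert collection_shape("the second prod failure") == "string"
```

The test names carry the weight here. Anyone reading the suite learns the field has three shapes without opening the vendor docs.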

Write it out

I learn things when I write out my thoughts. When I finished the LinkedIn response, I'd still say that I'd do, or that I have done, Contract Testing. Having written this out; I don't think I ever have, or ever would.

I'll write tests against an API to document the behavior of the API. Not for purposes of detecting breaking changes - but for the purpose of documenting it. Idiotic things like having the type of a field be an array or an object or a string or a null. Yeah... they also returned a null in a scenario.

A suite of contract tests isn't going to be useful unless you're running it on a schedule to detect these breaking changes. And if you do detect the change... you probably already know things are broken from getting a phone call.

If you break and you can't identify why - your system is not gonna be fun to troubleshoot. In most of my systems the stack trace will be informative enough that I know what API was called, because of which class threw the exception while trying to consume the data. One of the benefits of MicroObjects - If X breaks, there's only gonna be one thing that could happen for X to throw the exception. If it's a contract issue, there's only one API that'd be called.

To fix it - just that one class has to be updated. Or just the 3rd party abstraction to handle the format difference.

I used a chain of responsibility pattern for the issue I encountered. It'd check the type and return a type for the contract structure that the API returned.
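A minimal sketch of that idea, assuming the four shapes mentioned above (array, object, string, null). Every class and function name here is hypothetical; this shows the pattern, not the code I shipped.

```python
from dataclasses import dataclass
from typing import Any, Optional


# Hypothetical result types, one per shape the field was seen to take.
@dataclass
class Items:
    values: list


@dataclass
class ErrorObject:
    detail: dict


@dataclass
class Message:
    text: str


@dataclass
class Nothing:
    pass


class Handler:
    """One link in the chain: handle the value or pass it to the next link."""

    def __init__(self, next_handler: Optional["Handler"] = None):
        self._next = next_handler

    def handle(self, value: Any):
        # Reached only when this link declined; fail loudly at the end of the chain.
        if self._next is None:
            raise TypeError(f"unhandled shape: {type(value).__name__}")
        return self._next.handle(value)


class ArrayHandler(Handler):
    def handle(self, value: Any):
        return Items(value) if isinstance(value, list) else super().handle(value)


class ObjectHandler(Handler):
    def handle(self, value: Any):
        return ErrorObject(value) if isinstance(value, dict) else super().handle(value)


class StringHandler(Handler):
    def handle(self, value: Any):
        return Message(value) if isinstance(value, str) else super().handle(value)


class NullHandler(Handler):
    def handle(self, value: Any):
        return Nothing() if value is None else super().handle(value)


def parse_collection(payload: dict):
    """Run theCollection through the chain. A missing key is treated like null."""
    chain = ArrayHandler(ObjectHandler(StringHandler(NullHandler())))
    return chain.handle(payload.get("theCollection"))


print(parse_collection({"theCollection": [{"id": 1}]}))
print(parse_collection({"theCollection": "no results"}))
```

Each link knows about exactly one shape, so when the API invents a fifth one, the fix is one new class and the `TypeError` tells you which shape showed up.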

It was simple to handle once I documented the API's behavior with tests.

It Depends - except it doesn't

Due to writing this out... I don't write Contract Tests. I'll write tests against an API to document undocumented responses, or odd responses... but not simply to "see if it changes".

Q: Do I write Contract Tests?

A: No; I can't think of non-legacy system reasons to write them.