It's been a month... SIGH. Oh well. I'm bad at this.

What have I been doing? Lots of ... stuff?

OK; not much.

Let's check my MtgDiscovery.com git history and see if anything interesting is going on there...

Nope. Not a lot. Probably would have something if I used good commit practices.

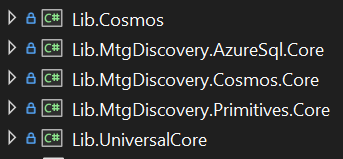

The biggest change is the DB migration. It worked well. A lot of commonality fell into place between how I have the CosmosDb and AzureSql code structured.

The commonality proved itself VERY useful in a "collection update" component that's currently required.

Background

The cards I've entered into my collection are stored separately from the card information itself. In Cosmos this was a separate collection; now it's in AzureSql.

As I'm customizing the incoming data to represent sets as I collect them, sometimes things don't go right.

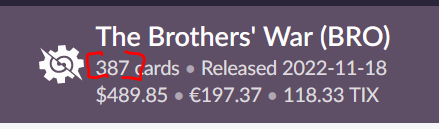

As an example, the source data has the latest set, "The Brothers' War", with 387 cards.

Ummm... No. The cards CLEARLY indicate that there are 287 cards.

So... what's the difference?

The "non-booster" cards, which I call "Extended". These are the borderless, alternate-art, and extended-art cards... things that aren't common in draft boosters. To me, they aren't "part" of the core set.
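A minimal sketch of that split, assuming the source data gives each card an integer collector number and that anything numbered past the printed set size (287 here) counts as "Extended". `SourceCard` and `ExtendedSplit` are hypothetical names for illustration, not the site's actual code.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical card shape: just enough to decide core vs. Extended.
public sealed record SourceCard(string Name, int CollectorNumber);

public static class ExtendedSplit
{
    // Assumption: any collector number above the printed set size
    // (287 for The Brothers' War) marks a non-booster "Extended" card.
    public static bool IsExtended(SourceCard card, int printedSetSize) =>
        card.CollectorNumber > printedSetSize;

    // Partitions the source data into the core set and the Extended set.
    public static (List<SourceCard> Core, List<SourceCard> Extended) Split(
        IEnumerable<SourceCard> cards, int printedSetSize)
    {
        ILookup<bool, SourceCard> byExtended = cards.ToLookup(card => IsExtended(card, printedSetSize));
        return (byExtended[false].ToList(), byExtended[true].ToList());
    }
}
```

If the 387 source cards were numbered 1 through 387, `Split(cards, 287)` would keep 287 in the core set and move 100 to Extended.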

I store set data with the collector information, not just the CardId. Now, suppose I'm entering data and a set happens to include the extended cards (looking at you, "The Brothers' War").

If I add the extended cards, they'll be added with the set Id of "The Brothers' War". Once I correct the data ingestion so a "The Brothers' War Extended" set gets generated, those entered cards have the wrong set id, and it needs to be updated.
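Roughly, the fix-up the tool has to do looks like the sketch below. It assumes each collector entry carries the same `CollectorId`/`CardId`/`SetId` triple as `CollectorCardModel`, and that the corrected card information can be read as a map from `CardId` to its current `SetId`. `CollectorEntry` and `SetIdCorrection` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical entry mirroring CollectorCardModel's id triple (counts omitted).
public sealed record CollectorEntry(string CollectorId, string CardId, string SetId);

public static class SetIdCorrection
{
    // currentSetIdByCardId comes from the corrected card information, so an
    // extended card now maps to the generated "... Extended" set.
    // Returns only entries whose stored SetId is stale, with the SetId fixed.
    // Entries for cards missing from the map are left alone.
    public static List<CollectorEntry> Corrections(
        IEnumerable<CollectorEntry> entries,
        IReadOnlyDictionary<string, string> currentSetIdByCardId) =>
        entries
            .Where(entry => currentSetIdByCardId.TryGetValue(entry.CardId, out var setId) && setId != entry.SetId)
            .Select(entry => entry with { SetId = currentSetIdByCardId[entry.CardId] })
            .ToList();
}
```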

Hence my tool.

Simplicity

I built the tool to pull and update collector data based on set information, all from Cosmos. With the collector data migrated to AzureSql, the tool needed to be updated.

The Cosmos queries stayed the same, but AzureSql needed all-new queries.

It was... simple. Like... "holy shit, it worked the first time" simple. It didn't, but only because of a tiny issue unrelated to the database updates.

The AzureSql code follows the pattern I already have in place for Cosmos. Given some implementation details, I couldn't use the exact same interfaces for both systems. A shock, I assure you.

But the pattern provided just enough structure that a container wrapping the DB-specific interfaces could implement one shared interface, regardless of the underlying system.
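To make the "container" idea concrete, here's a hypothetical sketch. The Cosmos and AzureSql interfaces deliberately don't match; each gets a small wrapper implementing one shared `ISetCollectorCards`, and the update tool only ever sees the shared interface. Every type name here is made up for illustration, and the in-memory class just stands in for AzureSql so the sketch runs without a database.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

public sealed record CollectorCard(string CollectorId, string CardId, string SetId);

// Hypothetical DB-specific interfaces: close, but not identical.
public interface ICosmosCollectorCards
{
    Task<IReadOnlyList<CollectorCard>> QueryBySetAsync(string setId);
    Task ReplaceAsync(CollectorCard card);
}

public interface IAzureSqlCollectorCards
{
    Task<IReadOnlyList<CollectorCard>> SelectWhereSetAsync(string setId);
    Task UpsertAsync(CollectorCard card);
}

// The one interface the update tool depends on.
public interface ISetCollectorCards
{
    Task<IReadOnlyList<CollectorCard>> CardsInSetAsync(string setId);
    Task SaveAsync(CollectorCard card);
}

// Containers: each wraps one DB-specific interface and exposes the shared one.
public sealed class CosmosSetCollectorCards : ISetCollectorCards
{
    private readonly ICosmosCollectorCards _cosmos;
    public CosmosSetCollectorCards(ICosmosCollectorCards cosmos) => _cosmos = cosmos;
    public Task<IReadOnlyList<CollectorCard>> CardsInSetAsync(string setId) => _cosmos.QueryBySetAsync(setId);
    public Task SaveAsync(CollectorCard card) => _cosmos.ReplaceAsync(card);
}

public sealed class AzureSqlSetCollectorCards : ISetCollectorCards
{
    private readonly IAzureSqlCollectorCards _sql;
    public AzureSqlSetCollectorCards(IAzureSqlCollectorCards sql) => _sql = sql;
    public Task<IReadOnlyList<CollectorCard>> CardsInSetAsync(string setId) => _sql.SelectWhereSetAsync(setId);
    public Task SaveAsync(CollectorCard card) => _sql.UpsertAsync(card);
}

// The tool never knows which database is underneath.
public sealed class MoveCardsToSet
{
    private readonly ISetCollectorCards _cards;
    public MoveCardsToSet(ISetCollectorCards cards) => _cards = cards;

    // Re-saves matching cards from one set under another; returns how many moved.
    public async Task<int> MoveAsync(string fromSetId, string toSetId, Func<CollectorCard, bool> shouldMove)
    {
        int moved = 0;
        foreach (CollectorCard card in await _cards.CardsInSetAsync(fromSetId))
        {
            if (!shouldMove(card)) continue;
            await _cards.SaveAsync(card with { SetId = toSetId });
            moved++;
        }
        return moved;
    }
}

// In-memory stand-in for AzureSql so the sketch runs without a database.
// Rows are keyed by (CollectorId, CardId) here, which is an assumption.
public sealed class InMemoryAzureSqlCollectorCards : IAzureSqlCollectorCards
{
    public List<CollectorCard> Rows { get; } = new();

    // Returns a copy, so callers can save while iterating the result.
    public Task<IReadOnlyList<CollectorCard>> SelectWhereSetAsync(string setId) =>
        Task.FromResult<IReadOnlyList<CollectorCard>>(Rows.Where(row => row.SetId == setId).ToList());

    public Task UpsertAsync(CollectorCard card)
    {
        Rows.RemoveAll(row => row.CollectorId == card.CollectorId && row.CardId == card.CardId);
        Rows.Add(card);
        return Task.CompletedTask;
    }
}
```

Swapping `new AzureSqlSetCollectorCards(...)` for `new CosmosSetCollectorCards(...)` is the only change `MoveCardsToSet` would see, which is the kind of property that makes a migration like this feel easy.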

And... it just... worked.

My mind is pretty blown at how it worked.

I expect it to be a huge shock that I attribute the ease of migration to the MicroObjects style of coding.

The abstractions are still a work in progress. I think there's some refactoring left to improve commonality. Which... I'll get to. :) It's really nice to have a project where I can iterate and experiment, and it's not just a thought experiment or a simplistic example.

It's not a complex site; I could probably get away with calling it a CRUD app. But I'm applying my practices as best I can, with the constraint of "I want to use it." So... I'll take shortcuts to get something done so I can use the site. There are a few tools that look super procedural because I wanted the result.

The more I touch that code, the more it gets refactored into MicroObjects.

Then there's the migration code, the literal "run once" job to move my collection data from Cosmos to AzureSql... it's... a long method:

```csharp
public async Task Process()
{
    // One-time migration guard: this already ran, so everything below is
    // intentionally unreachable (expect a CS0162 warning).
    s_logger.LogInformation("Already Ran. Do not re-run");
    return;

    s_logger.LogInformation("Starting Process...");

    // Pull every collection document out of Cosmos.
    ICollection<JObject> collections = await new DiscoveryCollectionCosmosQuery().ExecuteAsync();
    IAzureUpsert<CollectorCardModel> upsertCollectorCardAzureQuery = new UpsertCollectorCardAzureQuery();

    foreach (dynamic collection in collections)
    {
        s_logger.LogInformation($"Processing collection {collection.id}");
        JObject sets = collection.body.set;
        JObject cards = collection.body.card;

        foreach (KeyValuePair<string, JToken> card in cards)
        {
            s_logger.LogInformation($"Processing card {card.Key}");

            // Skip any card whose set can't be resolved.
            string cardSetId;
            try
            {
                cardSetId = CardSetId(sets, card.Key);
            }
            catch
            {
                continue;
            }

            CollectorCardModel cardModel = new()
            {
                CollectorId = collection.id,
                CardId = card.Key,
                SetId = cardSetId
            };

            // All three ids must be GUIDs before the row goes to AzureSql.
            if (Guid.TryParse(cardModel.CollectorId, out Guid _) is false ||
                Guid.TryParse(cardModel.CardId, out Guid _) is false ||
                Guid.TryParse(cardModel.SetId, out Guid _) is false)
            {
                s_logger.LogInformation($"CardModel has invalid Ids {JsonConvert.SerializeObject(cardModel)}");
                continue;
            }

            // Copy each Cosmos count property onto the matching model field.
            foreach (JToken jToken in card.Value)
            {
                if (jToken is not JProperty jProperty) continue;

                switch (jProperty.Name)
                {
                    case "unmodified":
                        cardModel.Unmodified = jProperty.Value.Value<int>();
                        break;
                    case "altered":
                        cardModel.ArtistAltered = jProperty.Value.Value<int>();
                        break;
                    case "signed":
                        cardModel.Signed = jProperty.Value.Value<int>();
                        break;
                    case "artistProof":
                        cardModel.ArtistProof = jProperty.Value.Value<int>();
                        break;
                }
            }

            s_logger.LogInformation($"Created card entry {JsonConvert.SerializeObject(cardModel)}");
            await upsertCollectorCardAzureQuery.UpsertAsync(cardModel);
        }
    }

    // Report the cards that never got a set assigned.
    foreach (string noSetCard in _noSetCards)
    {
        s_logger.LogInformation($"Unable to assign set to {noSetCard}");
    }
}
```

This isn't broken out because it won't be maintained. It's a long method... almost 70 lines. AND I USED A SWITCH!!!!

SHAME!

A brute-force implementation of the move.
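For contrast, one common switch-free shape for that property mapping is a lookup table from JSON property name to setter. This is a hypothetical sketch, not the site's code and not necessarily how MicroObjects would model it; `CardCounts` stands in for the count fields of `CollectorCardModel`, and the counts are assumed to already be read out of the JSON as ints.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the count fields on CollectorCardModel.
public sealed class CardCounts
{
    public int Unmodified { get; set; }
    public int ArtistAltered { get; set; }
    public int Signed { get; set; }
    public int ArtistProof { get; set; }
}

public static class CardCountsMapping
{
    // Each Cosmos property name maps to the setter it feeds, replacing the switch.
    private static readonly IReadOnlyDictionary<string, Action<CardCounts, int>> s_setters =
        new Dictionary<string, Action<CardCounts, int>>
        {
            ["unmodified"] = (counts, value) => counts.Unmodified = value,
            ["altered"] = (counts, value) => counts.ArtistAltered = value,
            ["signed"] = (counts, value) => counts.Signed = value,
            ["artistProof"] = (counts, value) => counts.ArtistProof = value,
        };

    // Applies every known property; unknown names are ignored, like the switch with no default.
    public static CardCounts From(IEnumerable<KeyValuePair<string, int>> properties)
    {
        CardCounts counts = new();
        foreach (KeyValuePair<string, int> property in properties)
        {
            if (s_setters.TryGetValue(property.Key, out var setter))
                setter(counts, property.Value);
        }
        return counts;
    }
}
```

Supporting a new count then means adding a table entry rather than another `case`.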

The DB move was actually kinda fun, and the slow shift toward common abstractions is fun to see and do.

As things get refactored into abstractions that are pretty stabilized, I'll extract them into a library project and migrate things to use it.

It helps keep the clutter down.

OK... kinda lost my lack of train of thought for this post... Not sure why you still read this madness!